TMI Operators Took Actions They Were Trained to Take

By Mike Derivan

Editor’s note. This post has a deep background story. The author, Mike Derivan, was the shift supervisor at the Davis Besse Nuclear Power Plant (DBNPP) on September 24, 1977 when it experienced an event that started out almost exactly like the event at Three Mile Island on March 28, 1979.

The event began with a loss of feedwater to the steam generator. The rapid halt of heat removal caused a primary loop temperature increase, primary coolant expansion, and a rise in primary system pressure above the set point of a pilot-operated relief valve in the steam space of the pressurizer. As at TMI, that relief valve stayed open after system pressure had dropped, resulting in a continuing loss of coolant. For the first twenty minutes, the plant and operator responses at Davis Besse were virtually identical to those at TMI.

After that initial similarity, Derivan had an aha moment and took actions that turned the event at Davis Besse into a historical footnote instead of a multi-billion dollar accident.

When Three Mile Island happened and details of the event emerged from the fog of initial coverage, Mike was more personally struck than almost anyone else. He has spent a good deal of time during the past 35 years trying to answer questions about the event, some that nagged and others that burned more intensely.

In order to more fully understand the below narrative, please review Derivan’s presentation describing the events at Davis Besse, complete with annotated system drawings to show how the event progressed.

Warning – this story is a little longer and more technical than most of the posts on Atomic Insights. It is intended to be a significant contribution to historical understanding of an important event from a man with a unique perspective on that event. If you are intensely curious about nuclear energy and its history, this story is worth the effort it requires. Mike Derivan (mjd) will participate in the discussion, so please ask for clarification if you still have questions after you have read the story and the background material.

The rest of this post is his story and his analysis, told in his own words.


My first real introduction to the Three Mile Island (TMI) accident happened on Saturday, March 31, 1979. At Davis Besse Nuclear Power Plant (DBNPP) in Ohio we heard something serious had happened as early as the day of the event, March 28, and interest was high because TMI was a sister plant.

Actual details were sketchy for the next couple of days, and it was mainly by watching the nightly TV news that it became clear to me something serious was going on. It was also clear from those reports that conflicting information was being put out: some reports indicated there had been radiation releases, while the utility, Metropolitan Edison, the owner of TMI, reported no radiation releases.

I even remember hearing the words “core damage” mentioned for the first time. That Saturday, a TV news report gave the first explanation I saw of the suspected sequence of events, using pictures of the system, and it became clear to me that the Pilot Operated Relief Valve (PORV) had stuck open.

My reaction was gut-wrenching and I was also in disbelief that TMI did not know what had happened at Davis Besse. That evening I watched the Walter Cronkite news report.

That report is discussed on this excerpt from Democracy Now on the occasion of the 30th anniversary of the event.

https://www.democracynow.org/embed/story/2009/3/27/three_mile_island_30th_anniversary_of

I sat there in total disbelief as he discussed a potential core meltdown. Disbelief, because if you were a trained Operator in those days it was pretty much embedded in your head that a core meltdown was not even possible, and here that possibility was staring me right in the face.

Cronkite’s report was also my first exposure to the infamous hydrogen bubble story. I had had enough Loss of Coolant Accident (LOCA) training to understand that some hydrogen (H2) could be generated during Loss of Coolant Accidents; after all we had Containment Vessel hydrogen concentration monitoring and control systems installed at our plant. But the actual described scenario at TMI seemed incredible, except it had apparently happened.

I would expect my reaction was the same as that of many nuclear plant Operators at the time, with the exception that the apparent initiating scenario had actually happened to me eighteen months earlier at Davis Besse. I just couldn’t get the question out of my mind: “Why didn’t they know?”

Collective Memory Says “Operator Error”

Since the TMI accident, hundreds of people have stuck their noses into its root cause. Both the Kemeny and Rogovin investigations identified a lot of programmatic “stuff” that needed to be fixed, and I agree with most of it.

However, I feel both of them skirted one important issue by using different flavors of “weasel words” in their discussion of operator error. The two reports handled that specific topic a bit differently, but in both the discussion was couched in side topics of contributing factors. And the general consensus of all the current discussion summaries I have read is that TMI was caused by Operator Error.

The TMI operators did make some operator errors, and I am not denying that. But my contention is that all the errors they made came after they had gotten outside of the design basis understanding of PWRs at that time. It is no surprise to anyone that when a machine this complicated gets outside of its design basis, anything might happen. You basically hope for the best, but you are going to have to take what you get.

Fukushima proves that, and everyone knows how and why Fukushima got outside of its design basis. How and why the TMI Operators got outside of their design basis will be the focus of my discussion. I will also explain why I think this was understood at the time of the investigations, but a conscious decision was made not to pursue it.

My whole point of contention is that turning off High Pressure Injection (HPI) flow early in the event, in response to the increasing pressurizer level, is the crux of the whole operator error argument. Every discussion says that if the operators hadn’t done that, the TMI event would have been a no-never-mind. And I agree.

But nobody really wants to believe they were told to do that for the symptoms they saw.

The Real Root Cause of the TMI Accident

In other words, they were told to do that by their training, compounded by tunnel vision and bad procedure guidance. I have believed this since the day I understood what happened at TMI. Furthermore, the TMI Operators were trying to defend their actions from a position of weakness: their core had melted, and nobody wanted to believe them.

I am not in a position of weakness on this issue. My event at DBNPP came out okay, so I have no reason not to be totally honest and objective about it. During the precursor event at DBNPP we also turned off High Pressure Injection early in the event in response to the symptoms we saw, and for the same reason the TMI Operators did it eighteen months later: we were told to do it that way.

This fact is apparently a hard pill to swallow. But if it is hard for you to accept, just imagine how I felt watching TMI unfold in real time.

And right there is the crux of the issue. Once those High Pressure Injection pumps were off, both plants were then outside the Design Basis understanding for that particular SBLOCA (small break loss of coolant accident).

So you hope for the best, but take what you get. Still, an error has obviously been made if not taking that action would have made the event a no-never-mind.

So who exactly made the error? Both the Kemeny and Rogovin Reports discuss the problems with the B&W (Babcock & Wilcox) Simulator training for the Operators. The important point they both apparently missed (or didn’t want to deal with, which is the explanation I prefer) is that this is really an independent two-part problem.

I will refer to controlling High Pressure Injection during a SBLOCA as part A of the problem, and to the actual physical PWR plant response to a SBLOCA during a leak in the pressurizer steam space as part B of the problem.

It really is that simple. B&W was training correctly on High Pressure Injection control (part A) for SBLOCAs in the water space of their PWR. But neither they nor Westinghouse correctly understood the plant response to a SBLOCA in the pressurizer steam space.

By omission, they were not training correctly for a SBLOCA in the pressurizer steam space (part B). To make matters worse, B&W training overemphasized the importance of the part A “rules”, to the extent that an operator would fail a B&W-administered Operator Certification Exam for not correctly implementing them.

Thus, when fate had the two occurrences, part A and part B, combine in the real world, where the plant responds according to the rules of Mother Nature, the B&W training and procedures led the operators to actions that put them outside the actual design basis, not the falsely perceived (and trained upon) design basis.

Until very recently my argument rested on simple logic and the sheer number of operators involved. In the Davis Besse September ’77 event there were 5 licensed operators involved in that decision, either by direct action or complacent compliance. In other words, all 5 agreed it was the right thing to do. Of course it wasn’t, but nobody objected because it was the correct part A thing to do and nobody understood part B of the problem.

Eighteen months later, at TMI in March ’79, additional Operators (just how many depends on the time line) repeated the same initial wrong actions. So we have about a dozen operators at 2 independent plants, eighteen months apart, all doing the same thing and all convinced they were doing the right thing.

Is it even conceivable that they did not all believe they were doing the right thing according to part A? I just don’t believe so; of course, we are all arguing from a position of weakness. It is the wrong thing to do when part A and part B are combined, so nobody really wants to believe we were trained to do it.

But as I explained it is really the two-part problem that created the issue. My point can be further emphasized by the fact that NRC Region III had heartburn over the report DBNPP submitted for our event. They did not like the fact that the report did not say the Operators made an error turning off High Pressure Injection.

I know why that happened. The person most responsible for writing the report narrative was actually in the Control Room during the event. He did not believe the action was wrong, based on the same training relative to part A of the problem. So why would he put that statement in the report? He was so convinced his own (complacent) agreement was correct that saying otherwise would have been a false statement.

New Information Revealed by Search

Just recently, new information came to my attention that absolutely confirms my belief that B&W was in fact totally emphasizing High Pressure Injection control in their training based solely on their understanding of the part A problem, with no understanding on B&W’s part of the part B problem or its effect when combined with the part A problem.

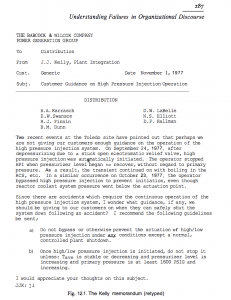

My understanding comes directly from seeing the complete text of the infamous Walters response memo of November 10, 1977, to the original Kelly memo of November 1, 1977. It is absolutely remarkable to me that 35-plus years after the DBNPP event, and almost as long after TMI, a totally unrelated Google search turned up a complete version of the Walters memo.

After half a lifetime of studying all the TMI reports, I had only seen one “cherry picked” excerpt from the Walters memo, basically saying he agreed with the operators’ response at DBNPP. The whole memo, in context, basically confirms that the operators’ claims of “we were trained to do it” are correct.

The original Kelly memo also confirms that Kelly still didn’t grasp the significance of the part B problem as related to the DBNPP event, or if he did, he didn’t convey it thoroughly and clearly in his memo. Both memos are presented and discussed below; draw your own conclusions. (The source document is here.)

The Kelly Memo

The referenced source document is basically a critique of these memos by experts in written communication. Here’s a summary. First, Kelly is talking “uphill” in the organization, so he couches his memo with that in mind. He asks no one for a decision, but basically asks for “thoughts”, and he makes a non-emphatic recommendation for “guidelines.”

My own additional observations are that he dilutes the importance of, and possibly adds confusion to, the recommendation by bringing “LPI” into the discussion, but most importantly he completely misses any discussion of the part B problem. He does say “the operator stopped High Pressure Injection when pressurizer level began to recover, without regard to primary pressure.”

But there is no mention of the fact that the system response was not as expected, e.g., that the pressurizer level went up drastically in response to the RCS boiling. He never articulates that the operators’ reluctance to re-initiate High Pressure Injection, even after we understood the cause of the off-scale pressurizer level indication, was based solely on that indicated pressurizer level and our training. Thus the memo totally misses the part B point that the system response was not what anybody expected, which was crucial to getting the guidance fixed.

The other thing I notice is that the memo is not addressed to Walters. I’ve also “been there, done that” in a large organization. I can easily understand how the recipient (Walters’ boss), upon receiving this memo with no specific articulation of a new problem (part B), would pass it to Walters with a “handle it, handle it… make it go away.” I also note N.S. Elliott on the distribution. He was the B&W Training Department Manager, so B&W training was directly in the loop on this issue as well.

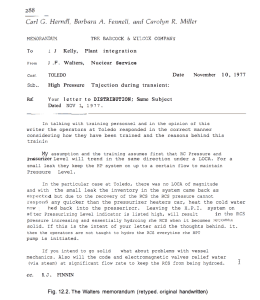

The Walters Response Memo

Note that the original Walters response memo to Kelly was handwritten, so it was apparently typed somewhere along the line. This is how it appears in the reference source, typos and all.

I’m omitting the communications experts’ comments; they are in the reference. Here are my comments. First, in simple operator lingo, this response is a “smart ass slap down” to Kelly, complete with all the accompanying sarcasm. But there are some very important admissions revealed here. The first is an admission, based on Walters’ discussion with the B&W Training Department, that we responded in the correct manner considering how we were trained, and it includes the bases behind our training.

This is what we operators had been claiming all along, but nobody wanted to believe it. Second, Walters clearly states, both as his personal assumption and as the B&W Training Department assumption, that RC pressure and pressurizer level will trend in the same direction during a LOCA. Bingo. He has just admitted that they still don’t get the specific part B contribution to the problem.

So they are in fact training wrong for this event because they don’t understand part B. Further, this discussion is happening after the DBNPP event, as a result of Kelly’s concerns, and well before TMI. Third, the tone of Walters’ sarcastic comments about a “hydro” (hydrostatic pressure test) of the RCS every time High Pressure Injection is initiated shows the disproportionate emphasis that B&W training was placing on “never let High Pressure Injection pump you solid.” Again, this is something the operators were claiming that nobody wanted to believe.

My conclusion, and it hasn’t changed in 35 years, is that the root cause of the TMI accident was that B&W simulator training and inadequate procedures put the TMI operators in a box, outside of their design basis understanding for that specific small break loss of coolant. A contributing cause is that B&W itself didn’t understand the actual plant response to that steam space loss of coolant event, because it had never been analyzed correctly. Then they also missed the warning the Davis Besse event provided.

Why Did Official Investigations “Miss” Root Cause?

For a long time I wondered why neither the Kemeny nor the Rogovin investigation reached the same specific conclusion I have. After all, both investigations involved some very smart people, and both looked at the same evidence. My thinking today is that they did reach that same conclusion. But I don’t actually know what they saw as the bottom-line purpose of their investigations either.

If you consider that no investigation report was going to change the condition of TMI, it may have been as simple as this: there is enough wrong that needs fundamental changing, so let’s just get those changes done and move forward. So neither group saw a need to identify the actual bottom-line root cause; rather, they just gave recommendations for preventing another TMI-type accident.

Further, by the time those 2 reports were published, it was well understood there was going to be a lawsuit between GPU and B&W. If one of those reports had specifically assigned B&W partial liability for the root cause, that conclusion, along with the report that made it, would inevitably have been dragged into the lawsuit.

I have no doubt this was actually discussed at the time. And I will further speculate that it was actually decided there was no reason to identify the true single root cause in the reports, because the lawsuit itself would decide that liability issue independently of the reports. My problem with that is that the lawsuit, which started in ’82, never really settled the liability issue, because it was mutually “settled” in ’83 before a conclusion was reached.

Another thing I think was actually discussed at that time was that stating the root cause as B&W training putting the operators outside of the design basis understanding for that event, because the event wasn’t understood by B&W, would open Pandora’s Box. They didn’t want to deal with “What else do you have wrong?”, and there were well over a hundred billion dollars’ worth of these NPPs still operating.

This conclusion is strongly reinforced for me by the Kemeny Report section “Causes of the Accident”. This section of the report lists a “fundamental cause” as operator error, and specifically lists turning off High Pressure Injection early in the event. And then the report lists several “Contributing Factors” including B&W missing the warning provided by the Davis Besse event.

If you read the list of contributing factors, there is a screaming omission: it never states that B&W (actually the whole PWR industry, if you consider the precursors) did not understand the actual plant response to a leak in the pressurizer steam space (what I refer to here as part B of the problem). And that is why B&W and the NRC both missed the DBNPP warning. Virtually nothing will ever convince me that all those smart people did not put that truth together.

Thus both their fear of opening Pandora’s Box and a conscious decision that there was no need to implicate B&W in any partial liability ruled the process. By doing that, they collectively decided to throw the TMI operators under the bus as the default position.

My conclusion about the missing Contributing Factor is an Occam’s razor solution: it is not “missing” at all in the sense that they didn’t “Get It”; it was a decision not to include it. After all, if that Contributing Factor had been included, who on earth would believe it was an operator error when the operators simply did what they were told to do in that situation? So they simply did not want to deal with the real issue: who made the error?

A Simple Analogy

For years I struggled to find a simple analogy to explain the position the TMI operators were placed in by their training, one that could be understood from common, everyday knowledge rather than technical detail that required understanding the complications of nuke plant operations. One reason that was difficult was that it required a “phenomenon” that is commonly understood today but was not understood at all at the time of the training. This is the best I can come up with.

Suppose in learning to drive a car you are being trained to respond to the car veering to the left. It’s simple enough, simply turn the steering wheel to the right to recover. It is also what your basic instinct would lead you to do, so there is no mental conflict in believing it.

It is also reinforced and practiced during actual driver training on a curvy road, and that response is soon embedded as the right thing to do. Now suppose your driver training also includes training on a Car Simulator. It is where you learn and practice emergency driving situations. After all, nobody is going to do those emergency things in an actual car on the road.

Here’s where it gets complicated. Assume virtually no one yet understands that when the car skids to the left on ice (because of loss of front wheel steering traction), the correct response is to turn the steering wheel into the direction of the skid, to the left. This is just the opposite of the non-ice response. And to make matters worse, because no one understands that yet, including the guy who built the Car Simulator, the Car Simulator has been programmed to make the wrong response work correctly.

So in your emergency driver training you practice it this way: the Simulator responds incorrectly to the actual phenomenon, but it shows a successful result, and you recover control. Since this probably also agrees with your instinct, and you see success on the Simulator, this action is also embedded as the right thing to do. One additional point: if you don’t take this wrong action, you will flunk your Simulator driver training test.

You know where this is going. Now you are out driving on an icy road for the first time and the car skids to the left. You respond exactly as you were instructed and exactly as the Simulator showed was successful, and you have an accident because the car responds to the real-world rules of Mother Nature.

An investigation is obviously necessary because, I forgot to tell you, the car cost $4 billion and you don’t own it. During the subsequent investigation everything is uncovered: the unknown phenomenon is finally correctly understood, the incorrect Simulator programming is discovered, and it comes out that the previously unknown phenomenon had been discovered before your accident and that your accident had even been predicted as possible.

But the investigation results are published and the finding is the accident was caused by your error of turning the steering wheel the wrong way on the ice. Nobody else is found to have made an error in the stated conclusions but you; it is simply a case of driver error. Do you feel you have been screwed? This happened to the TMI operators.

For everybody out there who doesn’t like my conclusions, I’ll just say that many of the principals of the investigations are still alive but choose not to talk, so simply ask them. Especially ask the principals in the GPU vs. B&W lawsuit, which should have determined any liability issues, why that didn’t happen. My idea of justice involves getting the truth, the whole truth, and nothing but the truth exposed. That process is still unfinished.

Update (March 26, 2015) Small formatting changes, word capitalization changes, and section title changes made. All original wording remains the same. End Update.

Thank you for this article; it explains something I could never quite grasp. Background: I was Manager of nuclear safety at Ontario Hydro on that fateful day. The event sent us off on a multi-month search for “lessons learned”. We read the admonition about never letting the pressurizer go solid, and our puzzlement grew into a question: “Why in hell not?” You may not know it, but OH operated 4×500 MWe units at Pickering WITHOUT PRESSURIZERS, and subsequently built and operated four more. Those units (six still running) operate in solid mode 100% of the time. They incorporate a power-indexed pressure setpoint droop on the secondary side, of course. Oh, and they do incorporate liquid pressure relief valves, as per ASME Code.

The most intriguing lesson came from a recently retired USN engineer-officer, who asked essentially the same question as above: “Why in hell not?” The question was asked in the question period following a brief for Canadian safety engineers conducted in the NRC Head Office. The shocked silence that followed made me think that the USN retiree had done something messy in the middle of the conference table. He didn’t say one more word that day.

By the way, a shift of operators at Bruce NGS experienced much the same sequence of events later that same year. Thanks to the TMI lessons, they operated correctly and survived the event and lived to err in some other way on another day.

Regards

Dan

Dan, “Why in hell not?” is an interesting observation. The new post-TMI Symptom Based emergency procedure guidelines for B&W plants have a new section for total loss of all feedwater (both main and emergency). There is only one way out of that box: initiate HPI and pump water through the Pressurizer PORV and code safeties to cool the core until feedwater can be regained. Hindsight is 20/20, but it also doesn’t hurt to actually analyze events outside the scope of plant licensing events. That approach was a huge benefit from the lessons learned.

BTW, when I was an instructor at one of Rickover’s Navy training plants in the ’60s, we actually pumped the plant solid at power because he wanted data to develop Navy procedures for operation with an isolated Pressurizer. After a week of nervous training the actual event turned into a yawn. mjd

Thanks Rod and Mike,

This is a very clear description of this most unfortunate event for the nation (not a “disaster” as nobody was injured or received any harmful dose, despite public Salem-witchhunt-like misperceptions). No, the unfortunate legacy of TMI is the alternate universe we live in still dependent on fossil fuel for so much of our energy, when we could have headed for a world with several hundred more 1000 MW nuclear plants providing most of our electricity, be well on our way to mostly electric cars and be somewhat on the way to electric home heating (at least south of I-70).

Mike’s story shows in such clear terms the need for effective, precise operating experience programs that produce actionable information that when used will prevent another incident. This applies to any hazardous industry.

I hope to spread the word on this post and Mike’s story. Thanks again.

That was GREAT Mike. Thank you for that. As someone who has made an effort to study these events the thing that always bugged me about TMI II was why didn’t someone refer to a steam table? Why didn’t they realize that the RCS was saturated? Was the training in place at the time such that it was impossible to get boiling in the primary of a PWR?

I also wonder what happened to the operators who were on duty. With the blame that was placed on them, I imagine they probably lost their careers?

Sean, I can’t really answer why they didn’t figure out they were at saturation (Sat). If you look at my slide show, toward the end under the “Prolog” heading I speculate, but I can only speak accurately for my own thoughts. One thing is different: I discuss the “slow period”. At least I got a break, and I never moved forward of the RO desk, so I maintained oversight. My guys were handling the small stuff, and my boss (staff SRO) took over the Make-up Pump panel operation to give us extra hands. So I actually had time to really focus on what I was seeing. If you look closely at their time line, they almost never got a break from one thing after another. Also, as near as I can tell, the actual unit supervisor didn’t get there immediately; the site supervisor did. So I can’t judge how much a normal operating “team” was working as a practiced team early on, when it really counted. I also believe it actually helped me that this was my first ever Reactor trip in a commercial plant. I had no expectations (or bad habits) other than that the plant would respond according to my training. When it didn’t, my nature is to think “I smell a rat, what’s really going on here?”

I just flat don’t remember any PWR training discussing boiling in the RCS, ever, including my Navy training, back in the ’70s time frame. In fact, the now-common operator term “sub cooled margin” was never heard in operator training (or procedures) pre-TMI. Probably hard to believe, but it’s true. That is the whole point: the PWR industry did not understand that a PWR fell to Saturation for a Pressurizer steam space leak and pushed the Pressurizer full. mjd

@mjd

As someone whose nuclear training began in 1981, a little more than 2 years post TMI, I can testify that understanding Psat – Tsat was an integral part of Navy operational training. I cannot speak from personal experience for the commercial industry, but I have heard that they also learned that particular lesson. I don’t think it took too long before the simulator programs incorporated the correct plant response to a steam space loss of coolant, even if there was not much public discussion about why reprogramming efforts were needed.

Rod, I totally agree with your time frame comment, but I qual’d on my first Navy plant in ’67. I agree with your simulator upgrade comment too. B&W had theirs fixed less than a month after TMI. They kludged it; it still couldn’t calc 2 phase flow, but the Pressurizer level showed the right direction for Sat.

In the US Navy, there was an operating curve that Reactor Operators were required to comply with during critical operation.

If you complied with the allowable areas of that particular curve – you avoided both low and high RCS pressure limitations associated with those plants.

The fact that a Navy Nuclear Operator states that they never knew the basis of the operating curves is an eye opener for me.

@Rob Brixey

While I agree that the operating curve has long been a feature of the training provided for certain Navy nuclear watch stations, even before TMI, many of the people who moved from the Navy program into operator positions in the commercial world spent little or no time in maneuvering.

They were not all EOOWs or ROs and did not have exactly the same training syllabus.

Rob, I agree with your observation about an operating curve (with a P vs. T box for critical operation limits). We had one too, and it was well understood, including the basis for the box limits. Please review my slide show: we were inside that box until the Pressurizer level approached its limit for critical operation, when the RO manually tripped the Reactor. The crap hit the fan after that, post trip. RCS P fell to P Sat while the Pressurizer level swelled to off-scale high from the Loop boiling. That was a totally new situation, outside our understanding.

The new EOPs, and the operating curve that goes with them, now have a computer-generated P vs. T display curve with a Sat line and a “20F sub-cooled” line above the Sat line. When the plant P vs. T plot goes below the 20F sub-cooled margin line, you must initiate HPI, and never turn it off while below that line, all the way down to either DHR entry conditions, LPI injection flow, or an empty BWST, which requires Containment Sump recirc switchover.

Simple, clear, a quantum leap forward for plant emergency ops. One of the benefits that came from the accident.
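The subcooled-margin rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any plant's actual EOP or plant-computer software: it assumes the IAPWS-IF97 Region 4 saturation equation as the "steam table" (real plant computers used their own correlations), takes pressure in psia, and all function names are hypothetical. The only number taken from the comment is the 20F subcooled threshold. Note that pressurizer level is deliberately not an input to the decision.

```python
import math

# IAPWS-IF97 Region 4 (saturation line) coefficients n1..n10.
# Assumption: used here as a stand-in for a plant computer's steam tables.
_N = (
    0.11670521452767e4, -0.72421316703206e6, -0.17073846940092e2,
    0.12020824702470e5, -0.32325550322333e7, 0.14915108613530e2,
    -0.48232657361591e4, 0.40511340542057e6, -0.23855557567849,
    0.65017534844798e3,
)
PSI_TO_MPA = 0.006894757293168  # 1 psi expressed in MPa
SUBCOOLED_LIMIT_F = 20.0         # the "20F sub-cooled" line from the comment


def tsat_kelvin(p_mpa: float) -> float:
    """Saturation temperature (K) at p_mpa (MPa), IF97 backward equation."""
    if not 611.213e-6 <= p_mpa <= 22.064:
        raise ValueError("pressure outside the saturation line (triple point to critical)")
    n1, n2, n3, n4, n5, n6, n7, n8, n9, n10 = _N
    beta = p_mpa ** 0.25
    e = beta ** 2 + n3 * beta + n6
    f = n1 * beta ** 2 + n4 * beta + n7
    g = n2 * beta ** 2 + n5 * beta + n8
    d = 2.0 * g / (-f - math.sqrt(f ** 2 - 4.0 * e * g))
    return (n10 + d - math.sqrt((n10 + d) ** 2 - 4.0 * (n9 + n10 * d))) / 2.0


def kelvin_to_f(t_k: float) -> float:
    return (t_k - 273.15) * 9.0 / 5.0 + 32.0


def subcooled_margin_f(p_psia: float, t_f: float) -> float:
    """Degrees F between saturation temperature at p_psia and RCS temperature t_f."""
    return kelvin_to_f(tsat_kelvin(p_psia * PSI_TO_MPA)) - t_f


def hpi_required(p_psia: float, t_f: float, limit_f: float = SUBCOOLED_LIMIT_F) -> bool:
    """True while the P-T point sits below the subcooled-margin line.

    Per the rule described in the comment, HPI must be on, and stay on,
    whenever this returns True. Pressurizer level plays no part.
    """
    return subcooled_margin_f(p_psia, t_f) < limit_f


if __name__ == "__main__":
    # Hypothetical readings for illustration only, not data from either event.
    for p_psia, t_f in [(2170.0, 580.0), (1000.0, 540.0)]:
        margin = subcooled_margin_f(p_psia, t_f)
        print(f"P={p_psia:.0f} psia  T={t_f:.0f} F  margin={margin:.1f} F  "
              f"HPI required: {hpi_required(p_psia, t_f)}")
```

The point of the sketch is the contrast with the pre-TMI "part A" rule: the decision depends only on how far RCS temperature is below saturation at the current pressure, so a rising pressurizer level with pressure at saturation still keeps HPI running.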

Thanks Mike, I appreciate the lesson. As a reactor operator, I fully understand the Monday morning quarterbacking syndrome. To partially answer Sean’s question above, San Onofre had 3 guys who were at TMI during the event, including the individual whose actions started the whole affair, the Full Flow operator (no blame here, the check valves in the air system failed). One of the guys told me in about 1985 that the NRC had only recently stopped pestering him.

Although a little off topic, I was curious as to whether your RCPs were damaged from operating without adequate NPSH.

Also, it appears from your description that DBNPP did not have the ability to feed both S/Gs with a single AFW pump; is that true?

Before San Onofre threw in the towel, we had become so much better in the simulator than when I first licensed in early ’93, and had learned so much and so many better ways of doing things, that it still amazes me we didn’t know those things in 1993.

Lastly, Mike, we in the industry who came after you have profited mightily from the experiences you had and my hat is off to you guys. I know by experience, thankfully only in the simulator, what it’s like to wonder “what the heck is going on?”

Dave, no damage to RCPs, but about 2 days of testing to prove it. NPSH was just a short term condition and pumps could take it. The couple minute run time with de-staged RCP seals was a bigger concern, much test data was taken and analyzed to come to that conclusion. There was even a “core lift” analysis done but not sure exactly why, as that problem is 4 running pumps below 525F (colder water, more core delta P, can lift fuel off lower grid & push up against top hold down springs), and we had 2 off early. Maybe an STA (wink, wink) can explain that… pumping bubbles causes higher core delta P?

Yes, our AFPs can feed & get motive steam from/to either SG. My slide show sketch shows feed side cross ties, but not steam side (drawing too “busy” already). But the actuation logic has to do it (or operator override). When it sees <600 psi on an SG (steam leak), it will stop AFW to that SG and line that side’s AFP up to the “good” SG, getting steam also from the good SG. So 2 pumps end up on the good SG. That is what would have automatically happened if we ignored the “low steam P block permit” alarm we received.

What the system will not do is feed 2 SGs with 1 AFP. Pretty sure that is because each pump is sized for 1 design DH load, and it’s safer & simpler to do that on one SG. AFPs can also get steam from the Aux Boiler, suction from service water, etc. We also had an electric Start-up Main Feed Pump, but did not need it in this event. mjd
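The realignment described in the last two paragraphs can be sketched as a small lineup function. This is a minimal Python illustration only, assuming two SGs and two pumps with hypothetical names (`AFP1`, `AFP2`, `SG1`, `SG2`); the real SFRCS logic works through channels and steam-side valves, and the "both SGs low" case is not described in the comment, so the sketch simply leaves the normal lineup in place there.

```python
from typing import Dict

# Low steam generator pressure setpoint quoted in the comment (psi).
LOW_SG_PRESSURE_PSI = 600.0

# Hypothetical normal lineup: each AFW pump feeds, and takes steam from, its own SG.
NORMAL_LINEUP = {"AFP1": "SG1", "AFP2": "SG2"}


def afw_lineup(sg_pressure_psi: Dict[str, float],
               low_pressure_blocked: bool = False) -> Dict[str, str]:
    """Return the SG each AFW pump feeds and draws motive steam from.

    Each pump maps to exactly one SG, reflecting the point that one pump
    is never split across two SGs.
    """
    if low_pressure_blocked:
        # Operator has blocked the low steam pressure logic: no realignment.
        return dict(NORMAL_LINEUP)
    low = [sg for sg, p in sg_pressure_psi.items() if p < LOW_SG_PRESSURE_PSI]
    if len(low) != 1:
        # No leak, or both SGs low: outside what the comment describes,
        # so this sketch keeps the normal lineup.
        return dict(NORMAL_LINEUP)
    good_sg = "SG2" if low[0] == "SG1" else "SG1"
    # Stop AFW to the faulted SG; both pumps end up on the good SG.
    return {pump: good_sg for pump in NORMAL_LINEUP}


if __name__ == "__main__":
    print(afw_lineup({"SG1": 900.0, "SG2": 450.0}))  # {'AFP1': 'SG1', 'AFP2': 'SG1'}
```

Mapping each pump to a single SG, rather than allowing a split, mirrors the design point above that one AFP is sized for one decay heat load on one SG.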

” There was even a “core lift” analysis done but not sure exactly why, as that problem is 4 running pumps below 525F (colder water, more core delta P, can lift fuel off lower grid & push up against top hold down springs), and we had 2 off early. ”

I believe the concern here is that the higher momentum of the colder, more dense RCS fluid could possibly displace structure / components in the core. I know at SONGS and St. Lucie the fourth RCP was not started until RCS temperature was over 500F. The AP1000 has variable speed pumps with the lower speed used at lower temperatures.

After re-reading your post (and a cup of coffee), I understand your puzzlement. The only concern I could envision would be some kind of water-hammer under two-phase flow. Off the top of my head, I wouldn’t expect a significant void fraction, nor void / liquid separation between the RCPs and the core to lead to conditions causing water hammer.

I worked on that SONGS simulator. I wonder if they are still keeping it for E-Drills or have sold it off at auction. The night before they announced the plant closure, I was doing calculations to benchmark the transient response of the simulator. I got the news the next morning. I still have the draft of that report and the computer code output files, which I use to ballpark certain responses on the AP1000, as there are many similarities: 4 RCPs / 2 SGs, almost identical MWt.

The simulator is NOT being used as there is nobody left who knows how to run it. I believe they intend to sell it.

Thanks for sharing this Mike, and for presenting it Rod.

I agree that the TMI operators were “set up” to some extent by inadequate training, procedures, and equipment. But as mentioned above, were they not at least trained at that time, either by the Navy or the utility, on use of a steam table?

And unrelated to the HPI issue, do you have any special insight as to why the Emerg FW (“12”) MOVs were left closed? And why they were not designed to receive an auto-open signal on EFW initiation?

Also, any insight regarding the initiating event? I have heard both that the instrument air and service water systems were (inadvertently?) crosstied, but also that the water intrusion was simply the result of a stuck IA check valve.

Thanks in advance for your response.

@Atomikrabbit

Have you read the following three posts and the related discussion threads?

https://atomicinsights.com/three-mile-island-initiating-event-may-sabotage/

https://atomicinsights.com/sabotage-started-tmi-part-2/

https://atomicinsights.com/sabotage-tmi-part-3/

Even though there were many specific differences, it is interesting to note that the “reactor accident” depicted in the first half hour of The China Syndrome also featured an operating crew that stopped the high pressure injection system when they had an indication that the pressurizer level was off scale high. The often pictured scene of Jack Lemmon with the worried face and sweaty brow was from a time when he was trying to figure out where the water was coming from.

Weird, huh?

“it is interesting to note that the “reactor accident” depicted in the first half hour of The China Syndrome also featured an operating crew that stopped the high pressure injection system when they had an indication that the pressurizer level was off scale high.”

If I recall correctly, the dialog was a mishmash of PWR and BWR terms. I think they refer to Reactor Feed Pumps, etc.

I have heard that the control room scenes were shot in the Trojan simulator. It seems unlikely that a utility would help the production of a blatantly anti-nuclear film. But stranger things have happened.

@FermiAged

Sure, there was a mishmash of PWR and BWR terms. Poetic license was certainly a part of the storytelling. The technical advisors to the movie were GE BWR engineers (the infamous GE Three), and there was a lot of involvement from the activists at the Union of Concerned Scientists.

In certain scenes, it appeared to me that the real facility being depicted was Rancho Seco, which, like Davis Besse and TMI, was a B&W 177.

I found the film online at:

https://archive.org/details/ChinaSyndrome

I haven’t watched since it first came out in 1979.

It felt entirely BWR. The language/jargon was BWR jargon. The scenario lined up with a very likely BWR post trip response (sudden level drop, post trip, which wasn’t detected due to a stuck indication). The actions in response line up with what was expected at the time. Even the “the book says you can’t do that” makes sense, because in The China Syndrome they violated their 100 degree F/hr cooldown rate in order to use their LPCI (low pressure coolant injection) pump.

@Michael Antonelli

What about the part when they stopped injection because the stuck level indication showed that it was “out of sight high” and Lemmon was trying to figure out where the water was coming from? What about the part when the accident was halted by shutting isolation valves for what appeared to be a stuck open relief valve?

The China Syndrome’s fictional event, which has some echoes of the Three Mile Island accident, starts at 12:40 and is declared to be over by 21:33.

https://archive.org/details/ChinaSyndrome

Though most of the terms used are BWR terms, the crux of the matter is a reactor water level indication that is so high that it causes the operators to take actions that are not in accordance with “the book” in order to address that particular indication. Once the operators realize that the indicator they were focused on was wrong because it was stuck and that water level is actually too low, they take other actions to reduce plant pressure and allow low pressure injection pumps to put water into the core, thus avoiding core damage – that time.

As we all know, The China Syndrome filming was completed well before TMI and the movie was in the theater when the accident happened. I am pretty sure that the movie was not completed when the Davis Besse event happened.

BWRs, as they operate at saturation conditions, have many causes of false high level indications, in addition to true high level conditions like I described above. Some examples of causes of false high level indications include rapid pressure reductions/steam throttling changes, ‘notching’ of instrument reference legs, and “Rosemount Syndrome” (a failure mode of Rosemount transmitters in the 70s/80s). The worst false high level condition is caused by drywell temperature increases. Operators need to be very aware of these things, as you can falsely believe your reactor inventory is adequate (see the Fukushima Unit 1 response: their drywell temp was very high, and their instrument reference legs were all in saturation and indicating falsely high. This affected accident response and decision making).

In the film, they halted the event by reducing pressure with SRVs to inject with LPCI. The LPCI (low pressure coolant injection) system has a ~300# shutoff head, while BWR post scram NOP is around 900#. In a BWR design, a low-low level alarm automatically shuts the main steam lines, so SRVs would have to be used to reduce pressure and get LPCI’s injection permissive met. That’s when Lemmon opened the 4 relief valves. Going from 900# to 300# busts the 100 degF/hr cooldown limit (post scram I’m not allowed to reduce pressure to < 500# for the first hour unless an EOP override is in effect; these overrides and EOPs did not exist in the 70s).
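Why that depressurization busts the limit is simple arithmetic. In a saturated BWR, coolant temperature tracks saturation temperature, and by approximate steam-table values going from about 900 psig to about 300 psig drops it by roughly 110F (about 533F to 421F). Any depressurization of that size completed in less than about an hour therefore exceeds 100 degF/hr. A minimal Python sketch follows; the function names and the 30-minute and 2-hour intervals are illustrative assumptions, not plant data.

```python
# 100 degF/hr cooldown limit cited in the comment above.
COOLDOWN_LIMIT_F_PER_HR = 100.0


def cooldown_rate_f_per_hr(t_start_f: float, t_end_f: float, elapsed_min: float) -> float:
    """Average cooldown rate over an interval, in degF per hour."""
    if elapsed_min <= 0:
        raise ValueError("elapsed time must be positive")
    return (t_start_f - t_end_f) / (elapsed_min / 60.0)


def exceeds_cooldown_limit(t_start_f: float, t_end_f: float, elapsed_min: float) -> bool:
    """True if the average rate over the interval is above the 100 degF/hr limit."""
    return cooldown_rate_f_per_hr(t_start_f, t_end_f, elapsed_min) > COOLDOWN_LIMIT_F_PER_HR


if __name__ == "__main__":
    # Assumed ~112F saturation temperature drop (approx. 533F -> 421F).
    print(cooldown_rate_f_per_hr(533.0, 421.0, 30.0))   # 224.0 degF/hr over 30 minutes
    print(exceeds_cooldown_limit(533.0, 421.0, 120.0))  # 56 degF/hr over 2 hours -> False
```

This only checks an average rate over the interval; it says nothing about the EOP overrides mentioned above, which are what allow an operator to exceed the limit deliberately today.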

I did not see them close a block valve or isolate a leaking system to end the event in the China Syndrome. (Added notes: I ran a loss of all high pressure feed in my plant's simulator. After the initial level drop and stabilization, it takes about 1 hour for inventory to reach top of active fuel, so the movie obviously sensationalized the timelines). I'm a BWR operator.

Post scram in a BWR, your level drops about 50-60 inches in 3-5 seconds due to shrink/void collapse. At the same time, steam flow throttling losses go away and the pressure regulator response causes a rapid 100# change in vessel pressure. Feedwater sees a large level deviation, which winds up the controllers and overfeeds the vessel to the point of flooding the steam lines. The steam lines are well above the normal level indications (narrow/wide) and are only visible on the upset and shutdown level indications (which are non-safety, powered by non-vital busses, and typically not even near the main feed controls). GE trained operators against flooding the steam lines in a BWR almost as hard as PWRs trained against going water solid. Water in the steam lines is an ASME emergency service condition. In the 70s, BWRs did not have vessel overfill protection, and several overfills occurred. Today there are high level trips on your feedwater and high pressure injection systems to prevent it. But back in the 70s, and even today in many plants that don’t have a digital feedwater system, the required operator response on a scram is first to immediately trip 1 feed pump and run the second feed pump to minimum flow in manual to prevent vessel overfeed.

I think I may have done a poor job in my above reply of getting at what I was trying to get at. The fact of the matter is that in both plant designs there are conditions that operators must avoid (PWR = solid / BWR = steam line flood), in both designs there are many things that can cause actual and false high level indications, and in both designs operators were over-trained to respond by shutting down high pressure injection as a result.

Sorry if I'm a little all over the place with this response. Also, I really liked this article, great job to you and mjd.

@Michael Antonelli

Thank you for the detailed schooling on BWR water level control. Your comment did a pretty good job of poking a big hole in one of my favorite pet theories regarding the initiation of TMI. The incredible timing coincidences still nag me; I generally don’t accept the idea that a string of low probability events will happen in just the right sequence especially when I see that there are identifiable interests that benefit from the string happening.

However, your comment helps me recognize that this particular coincidence was most likely not caused by someone connected to a big budget Hollywood movie that was not doing so well in the box office and who decided to increase its audience figures by forcing life to imitate art.

I just watched the movie for the first time since it came out when I was a junior nuclear engineering major. Some things that struck me:

No hearing protection. Even in 1979 this was a no-no.

They had HPCI valved out for maintenance. Pretty lenient Tech Specs.

NRC asked why alternative instruments weren’t looked at to confirm reactor water level. Godell has a blank look. However, another level indicator WAS checked – that is what clued them in to the stuck pen.

Ventana drawing shows a B&W OTSG. Dialog appropriate for a BWR, however.

Operators and Kimberly Wells nonchalantly sit on control boards and place bags, jackets over controls.

At the Foster and Sullivan work site, radiography of pipes is taking place with people casually walking about.

Does it really take an hour to figure out how to trip the plant?

The RCP looks more like a pump for a PWR than a BWR. Nevertheless, how could a plant be so seismically flimsy (particularly in California) that a RCP vibration could show up in the control room?

How does the plant Sequence of Events computer know when an “event” begins and ends?

I don’t remember armed guards ever being stationed in control rooms. The control room was on their rounds but not as a station. At least not at any plant I have been to.

Don’t they have to pull the Shutdown Banks before the Reg Banks to go critical?

These operators were totally non-professional, even by 1970’s standards.

Notice that whenever a pro-nuclear person spoke at the hearings, the dialog was muted and the scene quickly changed?

AR, I don’t have any special specific insights, other than “been there, done that, walked in their shoes.” And I know how I process multiple problems, just like you do: one rapid conversation at a time inside my head, using my language. But I can only have one conversation with myself at a time. This is not a wisecrack answer at all; it is a real limit on one’s ability to focus on several concerns at once. I think it is the direct cause of my delay between wondering why the P stopped dropping and putting together the P vs. level relationship. My priorities got constantly changed by other concerns going on, so I shifted the conversation to a different subject. I think this is one of the hardest things for a non-operator to understand in these events. There is a human limit to how much info you can process in these stinky ones, and operators will always inherently seek the answer in their training. One lesson my event reinforced was to maintain the team: when the “big flick” guy gets info overload, you are on the slippery slope.

I’m going to publish an extensive e-book on this whole subject; in it I discuss how these events get autopsied. Teams divide them up into piece parts, each with a narrow single-piece focus, and reach a conclusion. The conclusion is correct in that single-focus environment. That ain’t the Control Room in real time. That really is something you must experience to understand. We had ~800 annunciator alarms in our control room. In five minutes we had ~300 of them flashing. That’s an operator’s reality, not a table top environment.

A good write up about initiating event (hose) specifics is here: http://www.insidetmi.com/

I read them at the time.

This seemed like a rare opportunity to ask an actual licensed B&W operator from the TMI time period some questions that I have had. Not accusations, nor fault finding, but genuine questions.

If I am reinventing the wheel by asking something that is already apparent to all, then apologies.

@Atomikrabbit

I’m not trying to inhibit questions or discussions, just trying to make sure that you were aware of the background provided in those posts. I try really hard, but I cannot keep track of everyone who has participated in each discussion here. 🙂

Right. And although I read the original blogs and the comments at the time, I understand it’s quite possible my questions were completely addressed by subsequent commenters.

Besides, I’m trying to work on my “humility” (whatever that is), and I think I’m getting really really good at it! 😉

Atomikrabbit, I’m fine with any tech question. I think my post speaks to my opinion about judgmental conclusions. I’ve lived with that for 35+ years. The Rogovin Report accused me of cognitive dissonance and operator error, and used my name in the document. I live with that; but even they didn’t ask me what a steam table was (I do know, I worked in a cafeteria). So I don’t resent any questions. Besides, I hung it out there with this post. But it really is impossible to understand an operator’s job unless you have been there, so I generally don’t even try to explain it to non-operators. One thing everyone should keep in mind: there are operators reading this blog. When they don’t pipe up and defend themselves any more because they have “circled the wagons” in response to constant second guessing of their actions, you have a real problem. And I see a general trend on nuke blogs of very few operator comments.

FWIW, I’ve been licensed on W plants since 1983, sim instructor since 1996.

So the question for now is, did the 12 valves get an auto open on EFW initiation? Was there no alarm indicating they were in off-normal (closed) position? Shiftly log readings on their position? Since they were MOVs, I assume they had handwheels?

I realize D-B is not TMI, and the devil is in the details, but I think it’s safe to assume the plants were very similar.

Looking forward to your e-book, please advise us when it is available.

Atomikrabbit, I think you would be amazed today at the differences between the ’70s design AFW/EFW systems among the B&W plants. Almost every one of them was different, except maybe Oconee, because Duke built their own plants. Some of that was driven by regulations of that era. In the earlier plants they were not even Safety Grade, but a category called Important to Safety. If your plant was finishing construction towards the end of the ’70s, it was about a “coin toss” what you’d end up with. At DBNPP we got held hostage (our license) over High Energy Line Break rules, but I think TMI 2 (slightly later) didn’t. Six months before we were scheduled to take initial operator license exams they installed our Safety Grade Steam/Feed Rupture Control System, complete with new Tech Specs, Surveillances, a wall and whip restraints in an already crowded room, etc. I think the only common design requirement was the ability of one train to remove post trip decay heat.

Even its trip functionality was foreign to us operators, it wasn’t 2 out of 4, it was 1 out of 2 times 2. Then, since it was being back fit on a completed MFW system and had to be single failure proof we ended up with some half trip valves. The one that started this event was such, a single spurious trip of one SFRCS channel tripped it closed, causing a loss of MFW to SG 2. All of its original instrument inputs were “bouncy”, especially at low power. It takes operating time to sort that stuff out, but you know how that goes when people smell those generator breakers about to close.
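The difference between the two voting schemes mentioned above can be shown with a few lines of Boolean logic. This is a minimal Python sketch of generic 2-out-of-4 voting versus 1-out-of-2-taken-twice, assuming four inputs grouped into two channels with hypothetical names A1/A2 and B1/B2; it is not the actual SFRCS wiring. The half-trip valve is modeled, as an assumption, as a valve that closes when either channel trips.

```python
from itertools import combinations
from typing import Sequence


def two_out_of_four(inputs: Sequence[bool]) -> bool:
    """Generic 2-out-of-4: trip when any two of four inputs are tripped."""
    return sum(bool(x) for x in inputs) >= 2


def channel_tripped(x: bool, y: bool) -> bool:
    """One logic channel voting 1-out-of-2 on its own pair of inputs."""
    return x or y


def one_out_of_two_taken_twice(a1: bool, a2: bool, b1: bool, b2: bool) -> bool:
    """Full system trip only when BOTH channels A and B are tripped."""
    return channel_tripped(a1, a2) and channel_tripped(b1, b2)


def half_trip_valve_closes(a1: bool, a2: bool, b1: bool, b2: bool) -> bool:
    """A valve that closes on EITHER channel, so one spurious channel trip closes it."""
    return channel_tripped(a1, a2) or channel_tripped(b1, b2)


if __name__ == "__main__":
    names = ["A1", "A2", "B1", "B2"]
    # Compare the two voting schemes for every pair of tripped inputs.
    for pair in combinations(range(4), 2):
        inputs = [i in pair for i in range(4)]
        print([names[i] for i in pair],
              "2oo4:", two_out_of_four(inputs),
              "1oo2x2:", one_out_of_two_taken_twice(*inputs))
```

Running the loop shows that only the same-channel pairs (A1+A2, B1+B2) give different answers: 2-out-of-4 trips and 1-out-of-2-taken-twice does not. Meanwhile `half_trip_valve_closes` returns True for any single tripped input, which is why one bouncy instrument could close such a valve.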

The system we trained on at the Simulator was for Rancho Seco, which used the normal plant control system to control SG level on AFW/EFW. The one we started Power Ops testing with used the new SFRCS. So no, the commonalities between the plants are not there.

The TMI folks of that era I’ve talked with about the 12s say their Plant Process Computer did not even log most valve position changes at that time, which in theory could have shown the closing/reopening of the 12s during the test run 2 days before the accident. The 12s are a dead end. Whoever knows ain’t talking.

mjd, the ‘constant second guessing’ never goes away. We need to be self-critical and be willing to honestly assess our performance, but the pressure to be perfect, to be corrected constantly over the minutiae, is, I believe, corrosive over time and distracts from the big picture. I am probably wrong, but I blame INPO for a lot of this and feel they do a better job at ensuring they’re always needed than they do at actually making us better.

Operators do not get involved much. Few of us go to company functions/information meetings, etc. I am currently battling the anti nukes in my local newspaper, San Clemente Times, and it is difficult to motivate others to contribute to the battle. Who wants to go to a community meeting and listen to 30 speeches attacking what you do? In this industry we are used to dealing with people with integrity, at these meetings one is confronted with those who have none.

When SONGS was under fire, I distinctly remember being told in no uncertain terms to leave the public response to corporate communications.

The anti SONGS people were pushing a scenario about how a MSLB would cause such a high pressure drop across the SG tubes that the ones that were found to have failed their in situ tests would have failed. The NRC asked this question and I did a series of calculations / simulations to show it was not possible – the primary drop from the reactor trip was enough to avoid this situation.

I couldn’t put my results out publicly to dispel this, so I anonymously responded by pointing to a public NRC publication of a MSLB in a CE NSSS that showed primary and secondary pressure differences getting smaller after the trip, never approaching the failure threshold. Lotta good THAT did!

I sensed that SCE corporate management thought SONGS was a burden. There was a corporate publication about the history of SCE and there was only one or two pictures of SONGS and dozens of windmills, solar panels and other fantasies.

For all the public outreach that SONGS did, including beach cleanup, science fairs and simulator tours, what good did it do when the chips were down? Did ANYONE from the public raise a voice in support? I only recall that AFTER it was announced, some local businesses lamented the loss of business.

FermiAged, you must know me. I would be VERY interested in your calculations as well as the public NRC publication of a MSLB. Your description of the anti-nukes is dead on because that is exactly what they said–most of them are clueless as to what it all means.

I no longer have the calculations in my possession as we had to turn over anything having to do with the steam generators that might be proprietary or have some kind of relevance to a potential legal action against MHI.

The public calculation I referenced when addressing the anti-nukes is here:

http://www.osti.gov/scitech/servlets/purl/5025979

It is a 1980s study by Brookhaven National Lab of a CE MSLB concurrent with a SG tube rupture. Although dated, the results using current codes look about the same (at least thermal hydraulically; the tricky part is the asymmetric core power distribution).

Primary and secondary pressures are given in Figs. 10 and 13, respectively. The anti-nukes believe that the secondary pressure drop is so rapid that there must necessarily be a large primary to secondary differential pressure. They ignore the fact that primary pressure also drops rapidly due to the reactor trip and subsequent cooldown. The maximum pressure differential remained well below that which resulted in failure during in situ testing.
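The argument above is about a difference between two pressures that both fall, not about the secondary drop alone. A minimal Python sketch of that comparison follows; the pressure traces are made-up round numbers for illustration, NOT values from the Brookhaven study, and no failure threshold is assumed.

```python
from typing import Sequence


def max_primary_to_secondary_dp(primary_psia: Sequence[float],
                                secondary_psia: Sequence[float]) -> float:
    """Largest primary-minus-secondary pressure difference over matched time points."""
    if len(primary_psia) != len(secondary_psia) or not primary_psia:
        raise ValueError("need two non-empty traces of equal length")
    return max(p - s for p, s in zip(primary_psia, secondary_psia))


if __name__ == "__main__":
    # Illustrative traces only (same time points), NOT data from the study.
    secondary = [1000.0, 500.0, 300.0, 200.0]
    primary_after_trip = [2250.0, 1800.0, 1500.0, 1300.0]  # primary also falls
    primary_held_constant = [2250.0] * 4                   # the assumption being criticized

    print(max_primary_to_secondary_dp(primary_after_trip, secondary))     # 1300.0
    print(max_primary_to_secondary_dp(primary_held_constant, secondary))  # 2050.0
```

With the same secondary trace, holding primary pressure constant inflates the worst-case differential; letting the primary fall after the trip, as the comment describes, keeps it much smaller.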

I know you by name but I don’t believe we were ever introduced. We would probably recognize each other.

Are you still at SONGS?

David, I couldn’t agree more. And you have hit one of my concern triggers. It’s beyond the scope of this thread so I’ll be brief. INPO did a lot of good in the ’80s, mainly convincing the plants to do Preventive and Corrective Maintenance, and Engineering Root Cause analysis, the Navy way. But once everyone really believed that message, why do you need INPO? That effort is directly responsible for raising capacity factors from the mid 60% range to 90% plus. The downside is the plants run so well the operators don’t get challenged, except on the Simulator, so that training had better be exceptional. When I see an old-time operator commenting (on a blog) that he spent 2 hours in a critique for a 1 hour Sim session on an INPO SOER, as he said, “Where’s the beef?” When I see an NRC inspection report with a 40% failure rate on an operator written requal exam, I have to wonder… was this a surprise? Do they have a QA organization? Is anybody listening to their operators? When I see a 361 page report in a Corrective Action System for a minor problem, I have to wonder what the overhead is to keep that process in place. Including 7 signatures on every 5th page!

INPO’s slogan “Striving for Excellence” was a great goal in the ’80s when the bar was pretty low. We’re past that; now it equates to “LNT”: it not only costs overhead, but it demoralizes the operating crew. But it does provide jobs for clueless nitpickers. I’ll stop.

I am really looking forward to this e-book. I hope that my question was not taken the wrong way. I was just trying to delve further into the training that was given at the time, and frankly I think that any operator could have been in that position at that time; unfortunately it was Frederick, Zewe, and the other operators at TMI 2. I am glad they were able to move on in the industry. What happened was not fair to them.

That is amazing to me that DBNPP precursor event was your first commercial plant trip, wow.

Again, thank you for sharing and answering our questions.

Sean, ” I was just trying to delve further into the training that was given at the time…”

Here’s a good start, from the Essex Corporation, the Human Factors experts hired to do the training portion for the Rogovin Report. You really should read the whole section.

A quote from the e-book:

• Summary conclusions in the Rogovin Report by the Essex Corporation, the Human Factors Engineering experts hired by NRC during the Rogovin Investigation to look at the TMI Operator training.

Operators were exposed to training material but they certainly were not trained.

They were exposed to simulators for the purpose of developing plant operation skills, but they were not skilled in the important skill areas of diagnosing, hypothesis formation, and control technique.

They were deluged with detail yet they did not understand what was happening.

The accident at TMI-2 on the 28th of March 1979 reflects a training disaster.

The overall problem with the TMI training is the same problem with information display in the TMI-2 control room application of an approach which inundates the operator with information and requires him to expend the effort to determine what is meaningful.

Well… at least somebody “got it.” Too bad they were never asked to identify just who had made an “error.”

Poor people operating the plant. That must have been a difficult time for them, and then to be left out to hang, as this suggests.

I’m not trying to diminish the incident by what I say next. It was bad in an expensive-mistake way, caused by technological and training issues, it looks like. But it was not really that bad as industrial accidents go. It was probably more on the spectacularly wonderful possible-outcomes side of that class of incidents. That’s how I am beginning to think about it now.

It’s weird that I know far more about TMI, and hear about it far more today, than the Bhopal chemical disaster or the Banqiao Dam failure; on an industrial tragedy scale, both make TMI truly insignificant. And what’s really sad is that even judged against energy accidents since then in the US, in terms of people harmed, and after all that feverish reporting and media frenzy then and the references now, it’s still insignificant.

Hats off to the technology and the operators, even if it wasn’t perfect in hindsight. If that’s as bad as it got, early on, with older technology, we clearly were on the right track. I think events at Fuku even strengthen that conclusion.

Well, I am obviously not as technically proficient as you guys in N plant operation, but after some consideration, here is some thinking out loud about the general implications of an accident:

Obviously buoyancy, mass, and potential dilution play a role in all these types of industrial incidents. I am wondering now whether NP is unnecessarily singled out as the most threatening through very bad reasoning. First off, it obviously doesn’t work anything like an atomic weapon. Also, a plus with reactor-type incidents is that any release will likely be delayed from the initial incident, may even be foreseeable, and will also be quite hot and therefore lighter than the surrounding air.

Anyway here is the weather map for that week.

( http://docs.lib.noaa.gov/rescue/dwm/1979/19790326-19790401.djvu )

I feel like the radius maps that are so commonly displayed with respect to nuclear reactors are misleading at best, and evacuation zones based on them and on misconceptions about low dose radiation are themselves potential safety hazards that are arguably, in some cases, much more threatening than potential radioisotope releases.

Meltdown at Three Mile Island ( http://www.pbs.org/wgbh/amex/three/filmmore/description.html ) simply illustrates the public fear and touches on evacuation issues. [Also the hydrogen bubble thing. I don’t get that.]

@John T tucker

That is an interesting account of the events. It was sadly amusing to me to note that Roger Mattson’s name is used when he gets the last word, but it was conveniently replaced with a non specific “scientists” in the following passage:

Mattson, was not really a scientist, but a frightened little regulator specializing in modeling the behavior of emergency core cooling systems. He had insufficient plant operating or engineering experience and was the source of the controversy because he could not get a grasp on the fact that hydrogen will not explode without plenty of available oxygen.

Light water nuclear reactors always have some excess hydrogen; we use it to scavenge oxygen that is released when water gets dissociated in a neutron flux. There was never any danger that a hydrogen build up inside of an intact reactor coolant pressure boundary would explode. It just needed to be gradually vented out using a slightly modified “degassing” procedure.

http://www.pbs.org/wgbh/amex/three/peopleevents/pandeAMEX88.html

In an action that still irks some operators today, the NRC saw fit to give Mattson a cash award for his “performance” during the event. I may have the numbers wrong, but I think it was something like $15,000, which was a considerable sum of money for a bureaucrat in 1979.

A hydrogen explosion, and I thought it was possibly some complex reaction I didn’t know about. On the public side of things, including communications with civilians, local governments and especially the press the whole thing seems rather poorly handled, explained and even less successfully communicated at best.

@John T Tucker – You’ve got that right. There were poor communications all around from the government, the utility, and the vendor.

I just found this:

Three Mile Island anniversary: the lesson the nuclear industry refuses to learn ( http://www.csmonitor.com/Environment/Energy-Voices/2014/0328/Three-Mile-Island-anniversary-the-lesson-the-nuclear-industry-refuses-to-learn )

Areas as far as 300 miles away from Harrisburg were advised they might need to evacuate….

….the nuclear industry worldwide has not learned the most basic lesson of Three Mile Island – to get accurate information to the public in a timely manner….

He talks about the hydrogen thing too. I think he makes a good point despite the first impression from the sensational title. "Accurate" is the key word here; considering his assessment of San Onofre, he probably should have included "technically relevant" and "in correct perspective" too.

Despite the mistakes, the most common thread that runs through it all, up to today, is probably media sensationalism. That's the ubiquitous lowest common denominator in seemingly all but technical nuclear power reporting.

@david davison April 11, 2014 at 10:10 PM… "I am probably wrong, but I blame INPO for a lot of this"

From April 11 NRC event reports.

OFFSITE NOTIFICATION DUE TO A DEAD DUCK FOUND ON SITE

“Monticello Nuclear Generating Plant personnel discovered the remains of what appeared to be a deceased duck on plant property. The cause of death was not immediately apparent, no work was ongoing within the vicinity at the time. Notifications to the Minnesota Department of Natural Resources and the Division of Fish and Wildlife will be made for this discovery. This event is reported per 10CFR50.72(b)(2)(xi).

“The licensee has notified the NRC Senior Resident Inspector.”

Plant personnel could not determine if the duck was an endangered species.

I can't help but wonder if there is a backstory here: was an Operator overheard saying "If INPO finds out about that, he'll be a dead duck"?

All kidding aside, this is an example of just part of the regulatory burden all nuke plants have to add to their overhead. It is pathetic. Do dirt burners report this stuff? And folks really wonder why nukes throw in the towel. They are being financially strangled by crap. And as Rod frequently points out, most of it is part of the “plan.” mjd.

Turd burners, NG, etc. probably are supposed to report on stuff like this. Who is to know if they don’t? There is no NRC on site day after day so any dead duck is either buried or eaten; problem solved.

The research reactor at Battelle used to have to file security reports all the time because they had their perimeter monitoring motion sensors tuned up so high that rabbits and squirrels were setting off the alarm every time they darted through the exclusion path around the fenceline. More reports and “incidents” to file.

Mike, I used to work with you on some of the DBNPS Tech Spec CBE systems we developed for the training department back in the ’80s. I think all that got deleted when they had the one “purge” of training dept. personnel.

Wayne SW, sorry I can’t place you. That was a long, long time ago, on a planet far, far away. And “CBE” doesn’t ring a bell either. I left the training department ’81-ish. I went to the department that was responsible for development of the new Vendor Guidelines, through the Owner’s Group, that was to be the Technical Basis for the new Symptom Based Emergency Procedure. Also worked on the SPDS development.

I so believed in the new EOP concept that once I finished writing the procedure, I V&V’d it at the B&W Simulator, did the class room training sessions, 50.59’d it for Station approval, and we used it for the upcoming requal training cycle at the Simulator. Then the real work started, fixing my bugs, from the Operator comments from the Simulator Requal sessions. Rewrite, retrain, etc.

I believe we were the first plant to make the shift, even before NRC approval of the Vendor Guidelines, which was actually unnecessary under 50.59. If I remember correctly, we made the shift over during a refuel outage.

CBE=computer-based education. It was the old CDC “Plato” system that used on-line instructional touch-screen terminals. One of the first uses of that technology in that application. The development contract went to Ohio State and I was part of that team. I met with you a couple of times to review materials. The screen name is just a pseudonym. I’ve had my share of stalkers on the internet so I don’t like to put my real name out there if I can avoid it.

We also put in a bid to do training on the ATOG system which was new at the time, but didn’t get that contract.

Wayne SW, yes, Plato, now I remember. You probably worked with "wto" below also; we were the total Operator Training Dept in those days! WTO single-handedly set up our non-licensed operator training program: zone quals, qual cards, training goals, objectives, lesson plans, etc., all pre-INPO. He also did all the NRC pre-license training at the same time, and got an engineering degree at night. He's the guy I sent into containment to look after the event; I sure wasn't going to go… I had caused it. But I did sign the RWP!

I remember how resistant everyone was to CBE back then, too novel; now it's probably mainstream. There was also resistance to small part-task trainers (sims) that are now taken for granted. You guys were too cutting edge for a stodgy utility. Tell B.H. I said hi; I see he's a consultant to ACRS these days too. Go Buckeyes!

Rod et al,

I thought you might like to read a CNS paper I wrote in 1995 about a very interesting loss-of-coolant incident in Pickering Unit 2. It was handled very well because the operator understood what was happening and did not intervene in the automatic activation of the ECCS. Very important lessons were learned that were immediately shared with all the CANDU reactor operators. The paper is on Dropbox and available at: https://db.tt/ujp6msro

Fracture of the rubber diaphragm in a liquid relief valve initiated a loss of coolant in Pickering Unit 2, on December 10, 1994. The valve failed open, filling the bleed condenser. The reactor shut itself down. When pressure recovered, two spring-loaded relief valves opened and one of them chattered. The shock and pulsations cracked the inlet pipe to the chattering valve, and the subsequent loss of coolant triggered the emergency core cooling system. The incident was terminated by operator action. No abnormal radioactivity was released. The four units of Pickering A remained shut down until the corrective actions were completed in April/May 1995.

Jerry, very interesting event. Do you know if Simulators are used in operator training for these units? And if so, was something similar to this event actually trained for before this event occurred? I know Sims cannot always mimic the exact failure mode or location, but they can train on something where the principles of the plant response are accurate. You said the Operator understood what was happening; I'm curious whether that came from classroom or Sim training.

"Are you still at SONGS?"

FermiAged, still hanging on by my fingernails. 23 licensed ROs (they call us certified operators) and about a dozen or so SROs.

Mike,

I find your description of what really happened at TMI very compelling, and it does provide vindication to the operators at TMI. I grew up as a Navy Nuke, serving from 1968 to 1974. I had the opportunity to be both a prototype staff instructor and to serve aboard a fast attack submarine. I then entered the civilian nuclear industry and held virtually every position up the operations chain from AO to Ops Manager at a B&W plant.

I was RO licensed in 1978 and SRO in 1979, as you see, both before TMI. I did hold my RO license for two years and SRO for eleven. I went on to ultimately become VP nuclear at a BWR. After retiring, I served on a number of utility Nuclear Safety Review Boards so I do consider myself capable to make comments on your article.

I received the same simulator training as you, and yes we were emphatically “trained” at B&W to shutdown HPI if pressurizer level exceeded 290″. You are also correct in stating that you would fail your comprehensive exam if that was not done during a SBLOCA. I must admit that our training overall back then was clearly not as substantial as operators receive today with the INPO accredited training.

Our procedures back then left a lot to be desired compared to the information and formatting of the current operating, abnormal, and emergency procedures. However, in some respects, "older generation" operators did have to prove their integrated system knowledge better than today's operators.

For example, in my days, both in the submarine Navy and in civilian commercial power, you were required to draw systems from memory. Checkouts today allow operators to use a drawing to demonstrate flow paths, etc. I am not saying this is necessarily wrong; I just personally believe that I understood my system interrelationships better than some operators I observe today.

OK, I have digressed some. The generic simulators back then also were not capable of really demonstrating system response. Back to TMI, as you know, we were not really trained to respond to a SBLOCA in the steam space of the pressurizer, and yes, once we shutdown HPI, we were outside of the assumptions in the design basis for a B&W, and for that matter, any PWR including the navy plants.

In your case, you and your crew were successful, not because of your training, but because you knew something was not right, your procedures were not helping you, and you were now on your own, left to the collective knowledge of you and your control room staff. It worked!

It is a shame that our industry was not provided information about some events that had occurred in the international nuclear world, and also about the lessons from your experience at Davis Besse that B&W decided to ignore. TMI would have been prevented. However, I do believe good came from the TMI event. We now have much better procedures, simulators, and training to assist the operators in today's control rooms.

@wto

Thank you for your perceptive comment and for sharing your experience in this discussion.

I hope you don’t mind, but I added a few paragraph breaks to your comment to make it a little easier to read in the relatively narrow columns provided on the Atomic Insights web site. I did not make any other changes.

I want to make a general overview comment. First off, I may need to apologize for not being totally effective in my communication skills. Second, please don't anybody take this personally. The whole intent of my story was to provide some background insight on the history leading up to the TMI accident. I understand I have a unique view because of my position in that history, but I also understand I may have some bias because of that position. I also realize that, just like everybody else, I can be thin-skinned about criticism.

The point I wanted to make about the lead-up to TMI was that this was not a specific Davis Besse problem, or a specific TMI problem, or a specific B&W problem. It was a PWR problem. All the referenced TMI precursor events prove that. The initial ten-minute sequence would likely have been identical in any PWR. At five to ten minutes or so, you have a SBLOCA in the top of the Pressurizer and no HPI on.

Please read the Rogovin Report about the Beznau Incident at the two-loop Westinghouse NOK 1 plant in Beznau, Switzerland, on August 20, 1974. This incident had been predicted by the H. Dopchie letter to the AEC on April 27, 1971. The crux is the misunderstanding of the Pressurizer level response to a SBLOCA in the Pressurizer steam space. So now we add Westinghouse to the misunderstanding.

The gory details are that the Westinghouse Emergency Core Cooling System (ECCS) actuation was dependent on low RCS pressure coincident with low Pressurizer level. This was because they had the Pressurizer level response backwards. So at Beznau they have an event, the PORV fails open, Pressurizer level goes up, and at Time=X they have a SBLOCA with no HPI.
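To make the coincidence problem concrete, here is a minimal Python sketch of a two-condition "AND" actuation rule like the one described above. The setpoint values, the `PlantState` type, and the `eccs_actuates` function are hypothetical, chosen only for illustration; they are not actual Westinghouse setpoints or logic. The point is that a leak lower in the RCS drains the Pressurizer, so both conditions are met, while a steam-space leak through a stuck-open PORV drops pressure but drives Pressurizer level up, so the coincident condition is never satisfied.

```python
from dataclasses import dataclass

# Hypothetical setpoints for illustration only; NOT actual plant values.
LOW_PRESSURE_SETPOINT_PSIG = 1700.0
LOW_LEVEL_SETPOINT_PCT = 5.0


@dataclass
class PlantState:
    rcs_pressure_psig: float  # reactor coolant system pressure
    pzr_level_pct: float      # pressurizer level, percent of span


def eccs_actuates(state: PlantState) -> bool:
    """Coincident logic: actuate only if BOTH low pressure AND low level are present."""
    low_pressure = state.rcs_pressure_psig < LOW_PRESSURE_SETPOINT_PSIG
    low_level = state.pzr_level_pct < LOW_LEVEL_SETPOINT_PCT
    return low_pressure and low_level


# Leak lower in the RCS (the response the logic assumed): pressure and level both fall.
liquid_space_leak = PlantState(rcs_pressure_psig=1500.0, pzr_level_pct=2.0)

# Steam-space leak via a stuck-open PORV: pressure falls, but level rises.
steam_space_leak = PlantState(rcs_pressure_psig=1500.0, pzr_level_pct=90.0)

print(eccs_actuates(liquid_space_leak))  # True
print(eccs_actuates(steam_space_leak))   # False: a SBLOCA with no automatic HPI
```

Because the rule requires low level as a confirming signal, getting the level response backwards for a steam-space break means the automatic actuation silently fails, which is the situation at Time=X described above.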

Does that Reactor core really care why HPI is not on? Does it really make a difference why it is not on? An error has been made, who made it?

Now let's go to the "steam table" question asked. Since you already knew the answer, you asked it for a hidden reason, but not so hidden. So my message to you has failed. That question is not even relevant to my discussion. I will ask, "Why should they have even needed one?" And further, why did I have to face this event eighteen months before TMI? That's the point of my discussion. The design basis was not understood by the designers and trainers, despite the warnings before TMI.

Pre-TMI there was absolutely no accurate understanding, by the whole PWR industry, of the correct response of the RCS to this particular SBLOCA. And the training was wrong and the procedures were confusing. I guess I’m clueless, just what does this have to do with a steam table and “operator error”? Sorry, I just cannot make that connection. The way I connect the dots the TMI Operators were set up to fail by the Institutional Arrogance of the Whole System at that time. And I think that fact should be acknowledged.

@mjd

With all due respect here, I think you might be a little thin-skinned and somewhat harsh on the designers.

I’ll postulate that there is a reason, though perhaps not such a good reason, why the designers and trainers did not have a good understanding of the response in a real live system to a postulated event of a leak in the steam space of a PWR.

Based on my continuing investigation of the history of our still quite young technology, the problem was that the trainers and designers had little, if any, experience in operating the systems they were designing and teaching people how to operate. There is little evidence — outside of a few testing programs and inside the somewhat translucent Navy program — of people learning to design nuclear power plants based on the experience of testing physical plants through the full range of events that could happen.

It’s hard for some people to comprehend, but many nuclear plant designers have never even been inside an operational power plant, except perhaps for brief tours. That was especially true in 1979. At that time, I think that the oldest B&W plant had been operating for less than 10 years.